What is Generative AI?

Most of us have heard the ChatGPT hype by now. Whilst there are plenty of posts about its uses in novel situations, most organisations haven’t fully grasped the impact it’s going to have on our day-to-day work. In this blog we are going to explore how the landscape of recruitment is witnessing a paradigm shift.

Generative AI models can learn from vast quantities of data, mimicking human-like responses in text form with uncanny accuracy. They can be deployed to automate and streamline parts of the recruitment process. However, they can also be used to circumvent existing recruitment processes designed to assess candidates’ technical skills. The types of exams these technologies can now pass have already been well documented.

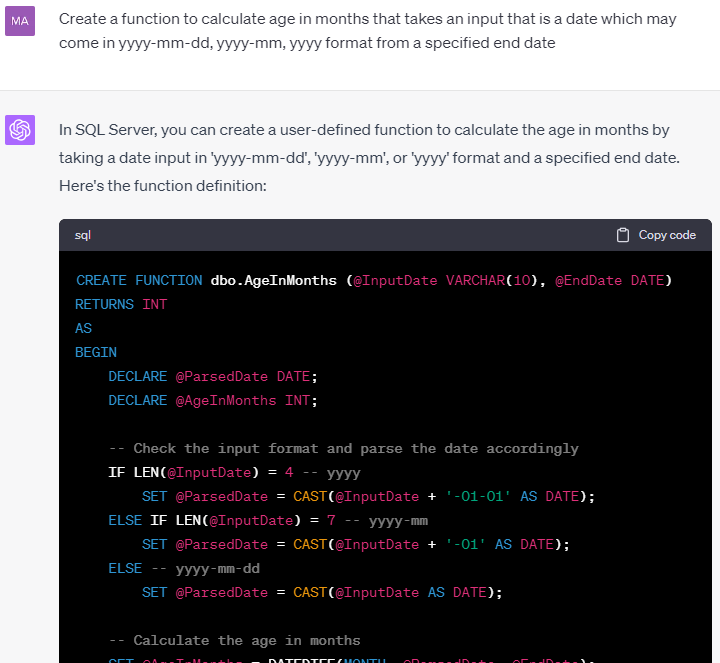

Take, for example, the complex SQL queries often used in technical data science interviews. These questions are designed to test a candidate's ability to manipulate, query, and handle data, essentially demonstrating their competency for the role. A generative AI model, when given the same test, can answer these complex questions effectively, thanks to its extensive training on a large variety of coding languages.

It can be prompted with a specific database schema, construct elaborate queries, create functions, calculate age in months from a given date in different formats, and even perform complex operations such as dealing with parallel time calculations. The rest of this blog will explore how well it can answer assessments, and what questions it raises about the future of recruitment.
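To give a feel for the kind of task mentioned above, here is a minimal Python sketch of "age in months from a date supplied in different formats". It is not taken from our assessment: the list of accepted formats, and the names `DATE_FORMATS`, `parse_date` and `age_in_months`, are assumptions made purely for illustration.

```python
from datetime import date, datetime

# Hypothetical list of accepted input formats (an assumption for this sketch).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def parse_date(text: str) -> date:
    """Try each known format in turn and return the first successful parse."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {text!r}")


def age_in_months(birth: date, as_of: date) -> int:
    """Number of whole months completed between birth and as_of."""
    if as_of < birth:
        raise ValueError("as_of must not be earlier than birth")
    months = (as_of.year - birth.year) * 12 + (as_of.month - birth.month)
    # The current month only counts once the day of the month has been reached.
    if as_of.day < birth.day:
        months -= 1
    return months


if __name__ == "__main__":
    born = parse_date("15/01/2020")
    print(age_in_months(born, date(2021, 1, 14)))
```

The same logic is usually a single expression in SQL, but the exact date functions differ between database engines, which is part of what makes it a useful interview question.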

Case study - What’s the impact on recruitment?

An important stage in the recruitment process for roles that require technical skills (e.g. data scientists and engineers) is some form of technical assessment. Technical assessments test whether the individual has the fundamental technical and problem-solving skills to deliver against the role requirements. For a data scientist, the assessment covers the most common languages, Python and SQL, and how they are applied to business problems.

The broad questions a technical assessment asks of a candidate include:

- Are they able to answer the business question?

- Have they used sensible logic and a sound approach in their algorithms?

- Is the approach efficient?

- Is the code well-structured, with the syntax readable by others?

These technical assessments can be structured in a few different ways:

- Supervised by an interviewer, or unsupervised, where the candidate can do it in their own time

- Allocated time or take-home test

- Completed online or in person

- Done electronically or on paper

- Run through an off-the-shelf provider like HackerRank, or developed bespoke for each organisation and role

There are pros and cons to each approach. As a small start-up with limited resources, we have experimented with a few different approaches. For our data analysts and data scientists we have chosen an unsupervised, allocated-time, online format with a bespoke assessment that fits the roles we are hiring for.

This approach allows us to scale our technical assessment, since our recruitment resources are constrained. It also mimics real-world conditions: for example, the candidate can use resources like Google and Stack Overflow, as they are part of the daily toolkit. This ensures we are screening for candidates with the fundamental skills we need in our business.

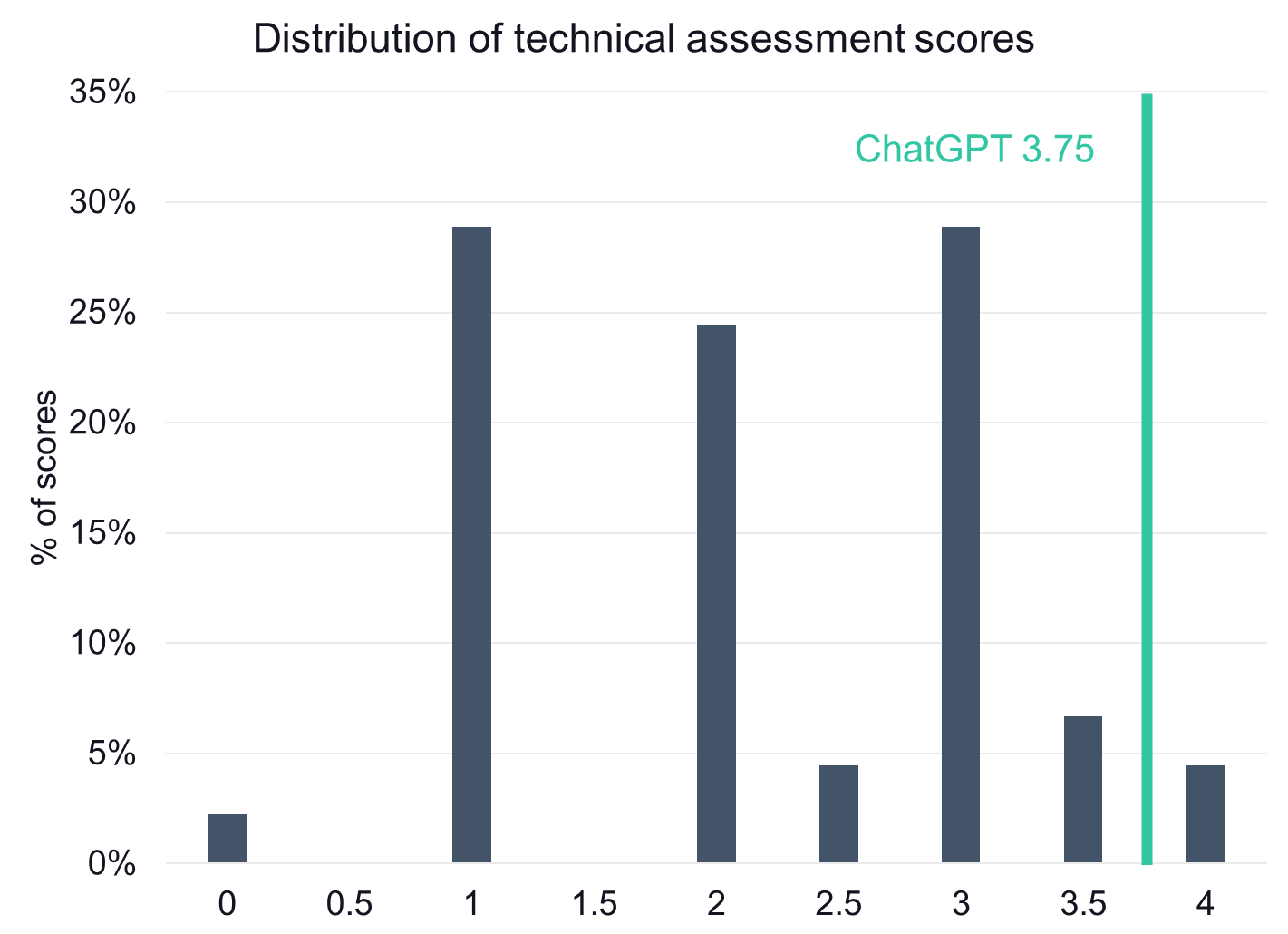

We use the same assessment for associate, mid-level, senior and lead candidates. This gives us a benchmark across all the levels and allows us to understand where an individual’s technical level should be. We would expect a lead to get a much higher score than the other levels. Our tests are currently scored manually by our data analysts.

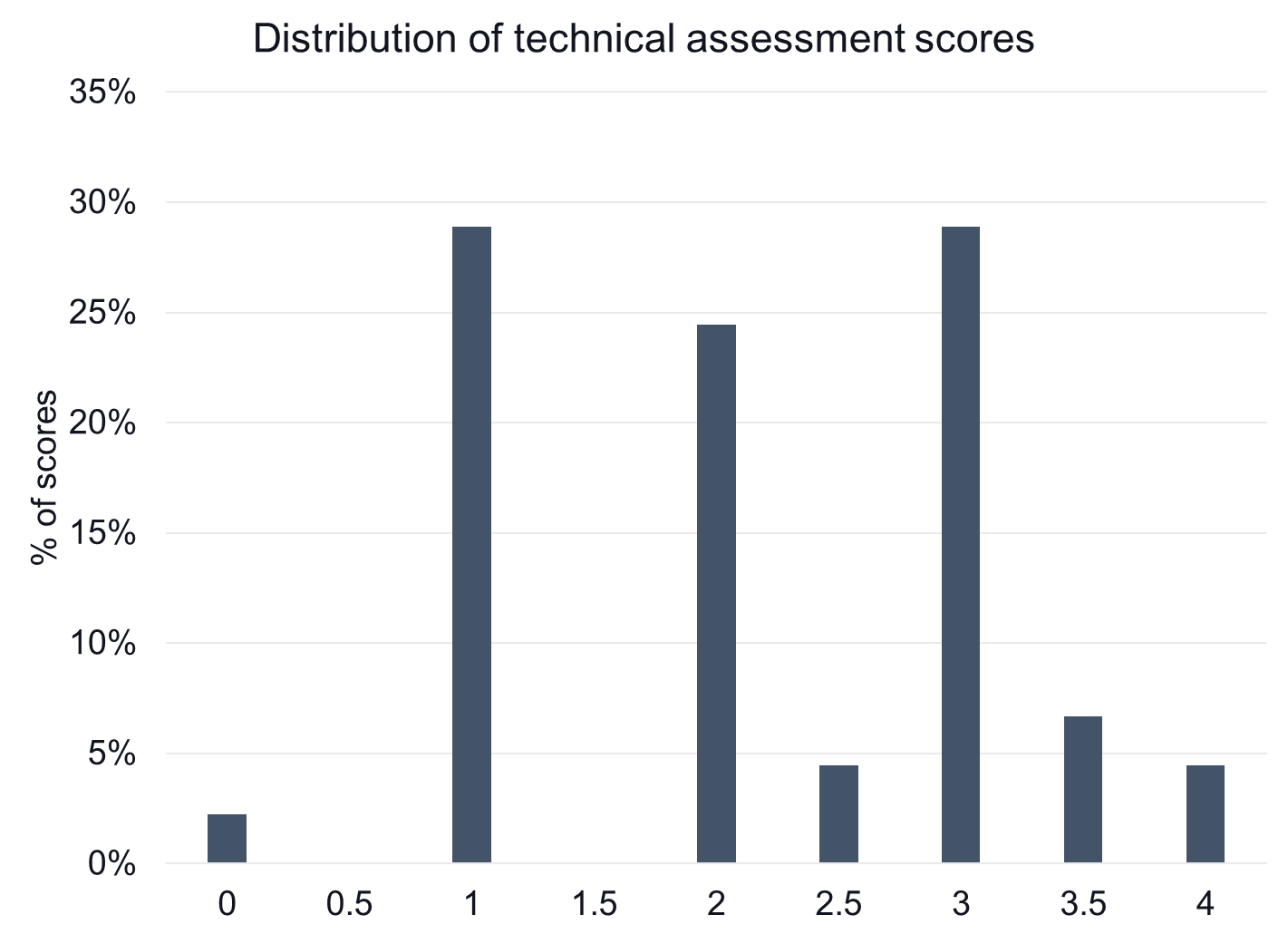

After 100+ interviews, this is the distribution of results we typically see across associate, mid-level, senior and lead candidates. Scores have been normalised for role level and range from 0 to 4. As you can see from the results, only about 10% of applicants receive a score of 3.5+.
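The arithmetic behind a figure like "about 10% score 3.5+" is simple to reproduce. The sketch below is illustrative only: this post does not describe how our scores are normalised, so the linear rescaling in `rescale_to_0_4`, the function names and the example marks are all assumptions, not our actual method or data.

```python
from typing import Sequence


def rescale_to_0_4(raw_mark: float, max_mark: float) -> float:
    """Linearly map a raw mark onto a 0 to 4 scale (one possible normalisation)."""
    if max_mark <= 0:
        raise ValueError("max_mark must be positive")
    if not 0 <= raw_mark <= max_mark:
        raise ValueError("raw_mark must lie between 0 and max_mark")
    return 4.0 * raw_mark / max_mark


def share_at_or_above(scores: Sequence[float], threshold: float) -> float:
    """Fraction of scores that are greater than or equal to the threshold."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(1 for s in scores if s >= threshold) / len(scores)


if __name__ == "__main__":
    # Made-up raw marks out of 60, purely to exercise the functions.
    raw_marks = [18, 27, 30, 33, 36, 39, 42, 45, 48, 57]
    scores = [rescale_to_0_4(m, 60) for m in raw_marks]
    print(f"{share_at_or_above(scores, 3.5):.0%} of candidates scored 3.5+")
```

A real scheme would also need to encode how expectations differ by role level, which this sketch deliberately leaves out.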

Our Chief Data & Analytics Officer (CDAO) then raised the question: would it be possible to beat our test using ChatGPT, and would we be able to detect it? Of course, we had to experiment. Our CDAO decided to run the test in secret.

Whilst the following section contains code, it is not necessary to understand the code to understand the potential implications of this technology.

ChatGPT’s attempt on the interview questions

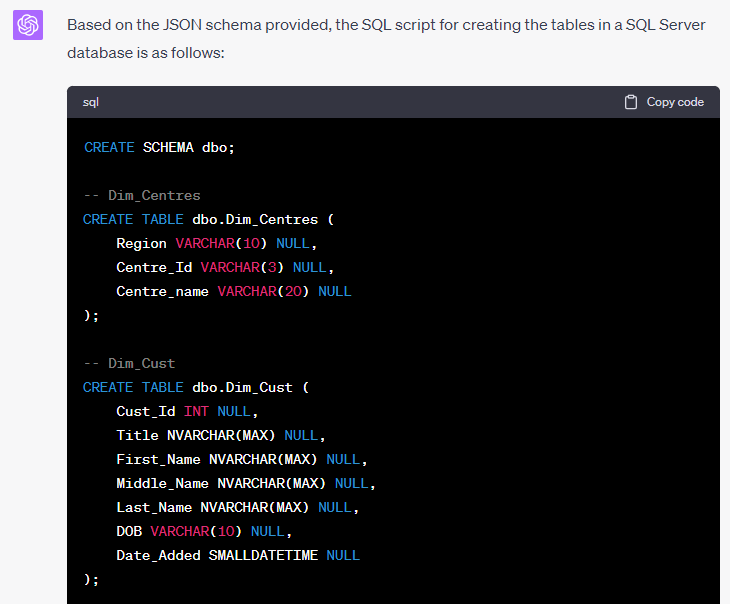

Loading the database schema

ChatGPT was first prompted with the schema of the database that hosts the test data.

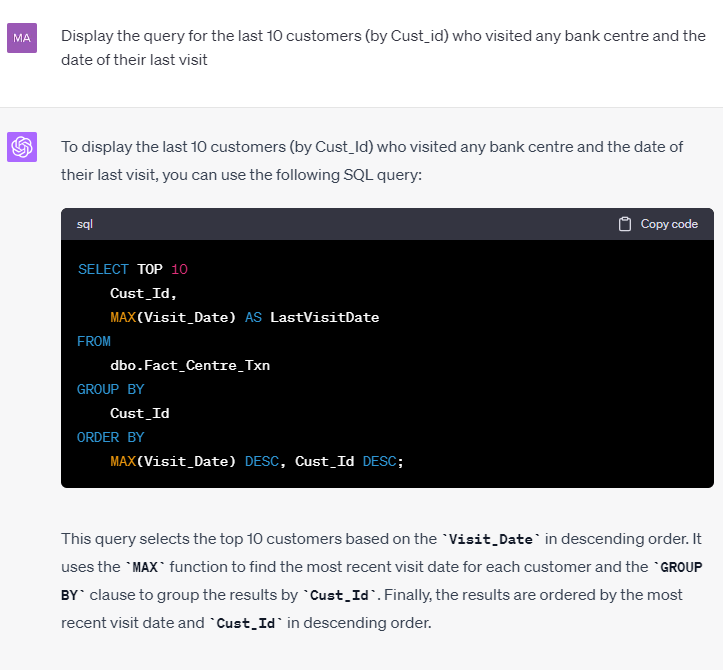

Answering the first simple question

The test starts off with some basic analytics questions, which ChatGPT answered with ease.

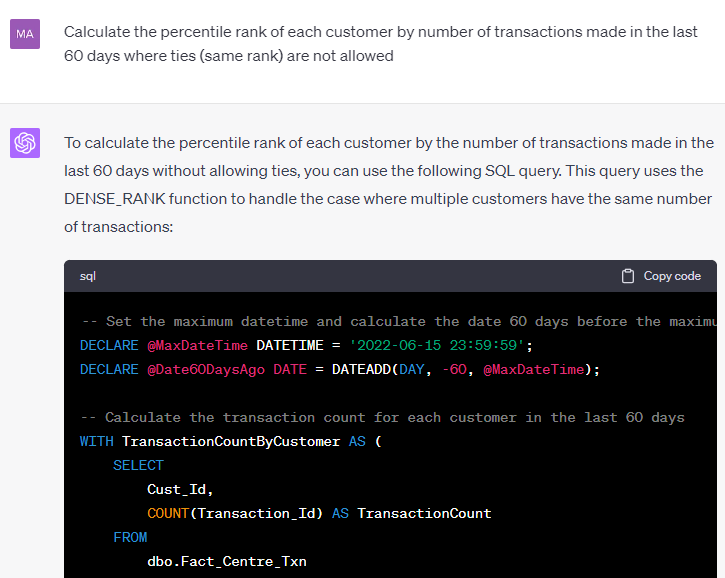

Answering more difficult questions

The test progresses to more difficult questions. ChatGPT did not get it right on the first go, but after a second attempt it was able to complete the question.

Answering the question no candidate has successfully answered

We have a question that no candidate has been able to complete within the timeframe, until, of course, ChatGPT came along.

There were several more questions it could answer, but for the sake of brevity we have only included a few examples.

The implications

ChatGPT was able to complete this assessment in less than 15 minutes. Most candidates struggle to complete the assessment within the allocated 90 minutes.

Our CDAO passed the completed test to our unsuspecting analyst for marking under the pseudonym “C. Goliparter”, or C(hat) G(oli)P(ar)T(er). As you can see, data scientists are renowned for their humour. Our analyst came back and proclaimed we must hire this individual, as they had scored one of the highest marks he had seen!

Whilst GPT-4 will handle the syntax and code, it won’t pick up data errors or derive insights as well as a human.

There are some real implications for us:

- How do we build a scalable technical test that doesn’t become a strain on our resources? Do we need to change the nature of the questions? Does it need to be supervised? Does it need to be done in person? How does that impact remote hiring? Do we need a technical component in our round 3 using a pseudocode approach just to double-check the candidate’s understanding?

- Probably the most fundamental question: if Generative AI technologies can write fundamental code as well as they did here, does the type of candidate we should screen for change? Should we dial down the fundamental technical skills because they can be augmented with Generative AI, and instead focus on other skills like problem solving, commercial awareness, creativity and collaboration? Do we need to focus instead on candidates whose skills can adapt to the ever-evolving technological landscape?

- How should Generative AI be used in the workplace? Is it an accelerator for productivity? Is it a lawsuit waiting to happen (infringement of IP laws, accidental leaks of confidential information)?

These are big, fundamental questions, and most organisations have not wrapped their heads around the ramifications of this technology. Whilst we have only explored the acquisition of talent, there are many other aspects of HR that are going to be impacted by Generative AI and, more broadly, data analytics.

Broader impacts of data analytics

Whilst Generative AI is one niche technology, data analytics offers a broader set of capabilities. When applied to derive actionable insights about employees, it is often referred to as people analytics. People analytics enables leaders to make critical decisions about employees throughout their entire lifecycle with an organisation, not just at the hiring stage. This includes keeping a pulse on and answering questions such as:

- What are the drivers of employee retention and what actions can I take to retain them?

- What are the possible biases in diversity, equity and inclusion within the organisation?

- What are the traits and behaviours of top performers, and how can we identify what learning and development initiatives we need to provide to the rest of the organisation to improve performance?

- How do we make strategic organisational design decisions using a data-driven mindset?

This is where solutions like Culturate come in, which help organisations quickly and easily derive insights to improve employee and organisational outcomes.

How can Culturate help?

Culturate is a new SaaS-based platform which automates People Analytics for organisations. It streamlines data sources from HR, communication, productivity and financial systems.

Culturate has a range of features that allow organisations to realise the benefits of talent acquisition analytics:

- Determining what behaviours make a model employee which can be used as guidelines for future hires

- Tracking how quickly candidates are going through the funnel

- Understanding the best source of leads for talent

- Integration with other systems including HRIS, payroll, communications and productivity tools

Culturate also has a range of features that allow organisations to realise the benefits of People Analytics more broadly:

- Pre-prepared reports - Analysis of the most valuable commercial use cases based on industry experience and feedback.

- Intuitive dashboard - Our software presents the data in a user-friendly interface.

- Integrations - A broad range of systems across HR (HRIS, ATS, payroll), communication (calendar, messaging, emails), productivity (CRM, ticketing) and financial data can be captured.

- Artificial intelligence - Leverages advanced data science and predictive algorithms to identify and predict employee turnover.

- Security by design - ISO 27001 certified, in line with industry best practice.

- Privacy by design - Has employee privacy at the core of its design so that personal data is anonymised and analysis is aggregated.